Observability

Cost Monitoring

While most standard LLM APIs return usage metrics (such as input and output token counts), they typically don't return the monetary cost of the request. Developers are often left to calculate it themselves, which means maintaining and applying up-to-date pricing information for each model.
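To illustrate what that manual calculation involves, here is a minimal sketch in Python. The model names and per-token prices in `PRICING` are hypothetical placeholders, not real rates, and `estimate_cost_usd` is an illustrative helper rather than part of any SDK.

```python
from decimal import Decimal

# Hypothetical prices in USD per 1M tokens. These are placeholders only;
# a real table must be kept in sync with each provider's published pricing.
PRICING = {
    "example-model-small": {"input": Decimal("0.50"), "output": Decimal("1.50")},
    "example-model-large": {"input": Decimal("5.00"), "output": Decimal("15.00")},
}

TOKENS_PER_PRICE_UNIT = Decimal(1_000_000)


def estimate_cost_usd(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the cost of one request from its token usage."""
    try:
        price = PRICING[model]
    except KeyError:
        # A missing entry is the typical failure mode of hand-maintained tables.
        raise ValueError(f"No pricing data for model {model!r}") from None
    total = Decimal(input_tokens) * price["input"] + Decimal(output_tokens) * price["output"]
    return total / TOKENS_PER_PRICE_UNIT


# Token counts as they might appear in an API response's usage metrics.
cost = estimate_cost_usd("example-model-small", input_tokens=1200, output_tokens=300)
print(f"Estimated cost: ${cost:.6f}")  # Estimated cost: $0.001050
```

`Decimal` is used instead of `float` so that very small per-request amounts add up without rounding drift. The table itself is the maintenance burden: every new model or price change requires an update.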

WorkflowAI simplifies cost tracking by automatically calculating the estimated cost for each LLM request based on the specific model used and WorkflowAI's current pricing data.

How to track costs

Using the Playground

...

Using the Monitor section

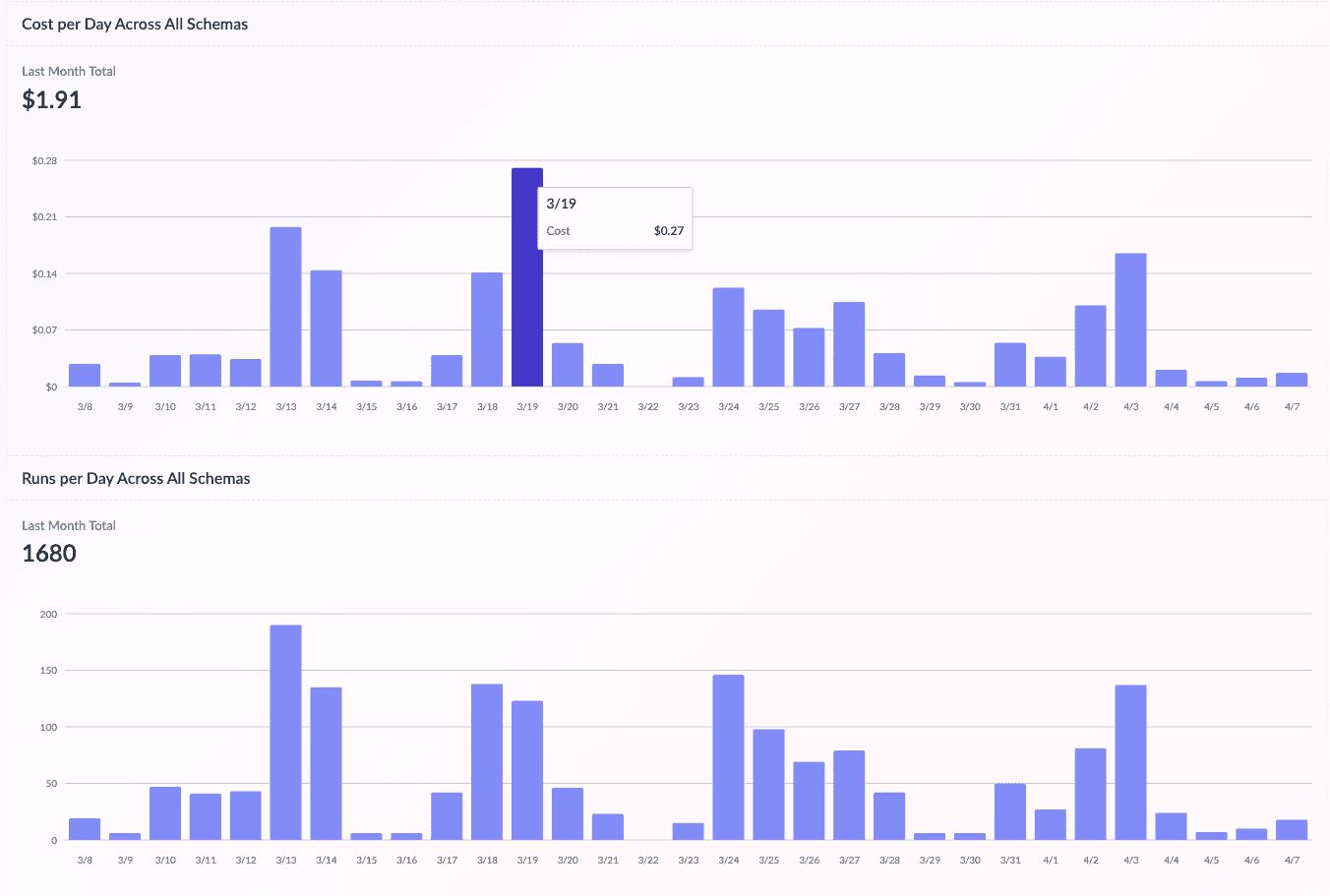

On WorkflowAI, you can track costs in the Cost page from the Monitor section:

Programmatically

You can also track costs programmatically: the API response returned when you run an agent includes cost information. See the Cost Metadata page for more details.
