Pricing

WorkflowAI offers a pay-as-you-go model, like AWS. There are no fixed costs, minimum spends, or annual commitments. You can start without talking to sales.

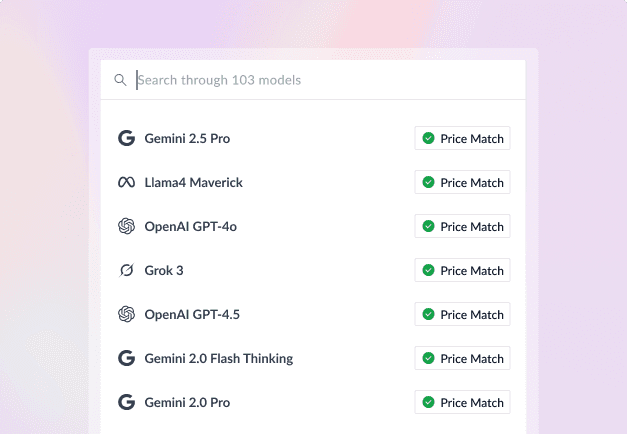

Simple pricing promise

WorkflowAI matches each LLM provider's per-token prices, so using a model through WorkflowAI costs the same as calling the provider directly.

Price per model


| Model | Price per 1M input tokens | Price per 1M output tokens |
|---|---|---|
| gpt-4o | $75 | $75 |
| claude-3-5-sonnet | $75 | $75 |
| gemini-2.0-flash-exp | $75 | $75 |
| llama-3.1-8b-instruct | $75 | $75 |
| mistral-7b-instruct | $75 | $75 |
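To estimate what a single call costs, divide each token count by one million and multiply by the matching rate from the table. The sketch below is a minimal Python illustration under stated assumptions, not a WorkflowAI API. `ModelPrice` and `request_cost` are hypothetical names, the example rates are made up rather than taken from the table, and tool charges are ignored.

```python
from dataclasses import dataclass

# Prices in the table are quoted per 1M tokens.
TOKENS_PER_PRICE_UNIT = 1_000_000


@dataclass(frozen=True)
class ModelPrice:
    """Hypothetical container for a model's USD prices per 1M tokens."""
    input_per_million: float
    output_per_million: float


def request_cost(price: ModelPrice, input_tokens: int, output_tokens: int) -> float:
    """Return the USD token cost of one request (tool charges not included)."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / TOKENS_PER_PRICE_UNIT * price.input_per_million
    output_cost = output_tokens / TOKENS_PER_PRICE_UNIT * price.output_per_million
    return input_cost + output_cost


# Assumed example rates for illustration only; substitute the real rates.
example = ModelPrice(input_per_million=2.50, output_per_million=10.00)
cost = request_cost(example, input_tokens=1_200, output_tokens=300)
print(f"${cost:.6f}")
```

Because WorkflowAI matches provider prices, the same arithmetic applies whether you call a model through WorkflowAI or through its provider.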

What we charge for

| Paid | Free |
|---|---|
| Tokens used by your agents | Data storage |
| Tools used by your agents | Number of agents |
| | Users in your organization |
| | Bandwidth or CPU usage |

How we make money

There are two ways to pay for AI model inference: buy tokens from a provider, or rent GPU capacity and run the models yourself.

Individual customers typically buy tokens because their usage is sporadic: they can't justify renting GPUs that sit idle most of the time. Even when GPUs aren't processing requests, you're still paying for them.

WorkflowAI pools demand from many customers, creating consistent 24/7 throughput that maximizes GPU utilization. This allows us to rent GPU capacity directly instead of buying tokens, at a much lower effective cost per token.

We charge you the providers' standard per-token prices and keep the difference between those prices and our lower GPU costs. That's how we match provider prices while staying profitable.
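The utilization argument can be made concrete with simple arithmetic. A rented GPU costs the same per hour whether it is busy or idle, so the effective cost per token scales with 1 / utilization. The Python sketch below is illustrative only: the hourly rate, throughput, and the `cost_per_million_tokens` helper are hypothetical and do not reflect WorkflowAI's actual costs or any provider's rates.

```python
def cost_per_million_tokens(gpu_hourly_usd: float, tokens_per_second: float, utilization: float) -> float:
    """Effective USD cost per 1M tokens on a rented GPU (hypothetical model).

    Every rented hour is paid for, but only the busy fraction of the hour
    (`utilization`, in (0, 1]) actually produces tokens.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return gpu_hourly_usd / tokens_per_hour * 1_000_000


# Assumed figures for illustration only.
HOURLY = 2.00        # USD per GPU-hour (assumption)
THROUGHPUT = 1_000   # tokens per second while busy (assumption)

for u in (0.05, 0.5, 0.9):
    price = cost_per_million_tokens(HOURLY, THROUGHPUT, u)
    print(f"utilization {u:.0%}: ${price:.2f} per 1M tokens")
```

With these assumed figures, a GPU that is busy 5% of the time costs ten times more per token than one busy 50% of the time, which is why sporadic users buy tokens while pooled, steady demand makes renting GPUs worthwhile.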

FAQ
